Experiential Design - Weekly Note

Week 01 (23/04/25)

Class Introduction & Assignment Brief

MVP = Minimum Viable Product

- Focus on the main feature of your app.

- It can include a mix of wireframes but must showcase the core functionality in a working form.

Vertical Slice

- Must be fully completed.

- Should include all animations, UI elements, buttons, and visuals.

- The outcome needs to be fully functional.

Presentation Video

- All assignments must be submitted with a presentation video.

Main Focus

- Prioritize User Experience (UX) throughout the project.

Type of Experience (Choose One or Combine)

- Tour, Space, Education, Turn-by-turn navigation (directions)

- It's not mandatory to focus only on scanning or only on the interface; feel free to combine both.

Tools Required - Unity and Vuforia

Week 02 (30/04/25)

Designing Experience - AR Design & Development

What is Experiential Design?

- Involves user mapping and journey mapping

- Focuses on designing meaningful user experiences

Key Terms:

- XD (Experience Design): How the overall experience is designed, especially in immersive environments like AR/VR.

- UX (User Experience): Primarily 2D design; focuses on usability and user interaction in digital products.

- CX (Customer Experience): Involves every interaction a customer has with a product or brand. Customers can also be users.

- BX (Brand Experience): Similar to CX, but focuses specifically on experiences related to the brand, often tied to company culture and identity.

- IA (Information Architecture): A planning stage that comes before wireframing. Helps organize and structure content effectively.

Difference Between UX and XD:

- UX = 2D interface and interaction

- XD = 3D or immersive experience design

Types of User Mapping:

- Empathy Map
  - Helps understand the user in depth
  - Covers what the user thinks, feels, says, and does
  - Example format only – can be customized

- Customer Journey Map
  - Tracks the user's journey with the product/service
  - No strict format – can be tailored to fit the context

- Experience Map
  - Broader than the customer journey map
  - Maps general human behavior or activity in a given context

- Service Blueprint
  - Visual representation of service interactions
  - Includes frontstage (user-facing) and backstage (behind-the-scenes) actions

Suggested UI/UX Resource:

NNGROUP – Nielsen Norman Group (recommended for learning UI/UX)

Week 03 (07/05/25)

Introduction to XR (Extended Reality)

- XR is an umbrella term that includes AR (Augmented Reality), MR (Mixed Reality), and VR (Virtual Reality).

- These technologies differ in their level of immersion and sense of presence, which are two separate concepts:
  - Immersion: How much you're drawn into the digital content.
  - Sense of presence: The feeling of "being there" in a virtual or digital environment.

Types of XR (From Less to More Immersive)

1. Augmented Reality (AR)

- Definition: Combines the real world with virtual objects.

- Function: Extends visual perception by overlaying digital elements onto the physical world.

- Device Example: Mobile phones and AR glasses.

- Example Use: Viewing extra product info through your phone’s camera.

- Types of AR:
  - Marker-based AR: Requires a visual marker (e.g., QR code) to trigger digital content.
  - Markerless AR: Uses GPS, sensors, or object recognition to place digital elements without a marker.

2. Mixed Reality (MR)

- Definition: Enhances interaction by allowing digital objects to recognize and respond to the real-world environment.

- Function: Digital objects interact with real-world physics and surroundings.

- Example: A digital ball bouncing off a real table.

3. Virtual Reality (VR)

- Definition: A fully computer-generated world that creates a sense of presence.

- Function: Extends experience by immersing the user in a fully virtual environment.

- Example: Standing on top of the Twin Towers in VR — something not possible in real life.

Additional Notes

- Projection Mapping: Can be considered an AR experience, especially in shared public spaces.

- Mobile Phones in XR: Mainly used in AR to extend information and visuals.

Class Activity 02: Enhancing a Space with AR

Chosen Place: Hair salon

- Idea: Our group decided to create a smart mirror that lets users visualize different hairstyles in real time. This helps hairstylists clearly understand what the customer wants, reducing the chances of miscommunication.

Problem Statement:

- There is often miscommunication between hairstylists and customers, leading to unsatisfactory results.

Solution:

- We propose a smart mirror that allows both the customer and the hairstylist to visualize and confirm hairstyle choices before cutting or coloring begins.

How It Works:

- The smart mirror will first scan the user's face to detect facial features and position.

- Users can then browse and select hairstyles to preview on their reflection.

- After choosing a hairstyle, users can adjust the hair color and see how it would look in real time.

- The mirror will also show how much hair needs to be cut to achieve the selected style.

- This system ensures that the customer and stylist have a shared understanding of the desired result, minimizing confusion and enhancing customer satisfaction.

Week 04 (14/05/25)

Unity (Continued from Last Week): Playing Around with Logic

We began by changing the build platform to Android or iOS in Build Settings and selecting "Switch Platform".

Important Note:

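- When adding UI/Canvas elements, the scene must include an EventSystem for UI elements such as buttons to respond to clicks and touches. Unity normally adds one automatically when you create the first Canvas from the GameObject > UI menu.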

Render Modes Explained:

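- Screen Space - Overlay: The UI is rendered on top of everything else in the scene.

- Screen Space - Camera: The UI is rendered by a chosen camera, placed at a set distance in front of it.

- World Space: The Canvas behaves like any other object in the 3D scene, so the UI can be positioned in the world (for example, next to an AR object) instead of staying fixed to the screen.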

Class Activity Summary:

In today's class, we did some hands-on practice in Unity by creating buttons for two objects (a cube and a sphere) and experimenting with logic. We created four different versions, each with different button functionality:

- Version 1: Hide and show an object using a button.

- Version 2: Use a single button to start and stop an animation. The button text changes to “Start” or “Stop” depending on the state. Only one button is displayed on the screen.

- Version 3: Use two sets of buttons to control animations independently—one set controls the cube, the other controls the sphere.

- Version 4: Use one set of buttons to control all animations (start and stop both cube and sphere animations at once).
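The four versions differ only in which objects a button affects and whether the button remembers state. As a rough illustration outside Unity, the plain-Python sketch below models that logic flow. It is not Unity code. `SceneObject`, `toggle_visibility`, `set_animating` and `StartStopButton` are hypothetical names standing in for a GameObject's visibility, its animation state and a button's label.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    """Hypothetical stand-in for a GameObject that has an animation."""
    name: str
    visible: bool = True
    animating: bool = False


def toggle_visibility(obj: SceneObject) -> None:
    """Version 1: each press flips the object between hidden and shown."""
    obj.visible = not obj.visible


def set_animating(targets: list[SceneObject], playing: bool) -> None:
    """Versions 3 and 4: a Start or Stop button applies to every object wired to it."""
    for obj in targets:
        obj.animating = playing


class StartStopButton:
    """Version 2: one button whose label shows the action of the next press."""

    def __init__(self, target: SceneObject) -> None:
        self.target = target
        self.label = "Start"  # nothing is animating yet

    def click(self) -> None:
        self.target.animating = not self.target.animating
        self.label = "Stop" if self.target.animating else "Start"


cube = SceneObject("Cube")
sphere = SceneObject("Sphere")

toggle_visibility(cube)               # Version 1: cube is now hidden
print(cube.visible)                   # False

button = StartStopButton(cube)        # Version 2
button.click()
print(cube.animating, button.label)   # True Stop

set_animating([sphere], True)         # Version 3: the sphere's own Start button
set_animating([cube, sphere], False)  # Version 4: one Stop button for both
print(cube.animating, sphere.animating)  # False False
```

In Version 3, each object has its own button set, which corresponds to calling `set_animating` with a one-item list. In Version 4, a single set receives both objects.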

In today’s class, we focused on exploring and experimenting with Unity's logic features without writing any code. We used buttons to make objects hide, move, pause, and resume. It was fun to see how these simple actions could control what happens in the scene just by assigning functions to buttons.

Although the tasks weren’t very complicated, I still felt really happy and satisfied with the results. The biggest difficulty in this class was understanding the logic flow, which I sometimes found confusing. However, I believe I will get more used to it as the semester progresses. Overall, this class taught me how much can be done in Unity even without coding.

Week 05 (21/05/25)

Unity Learning

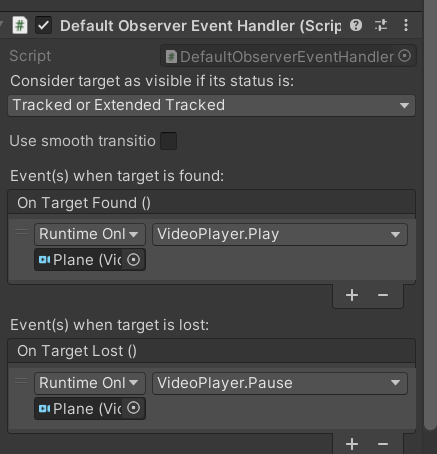

This week in class, we explored Unity by adding a video player and controlling it using buttons, experimenting with logic, and coding the functionality.

- Import the video and assign it to the Video Player's Video Clip field.

- Add a Script Machine component to the object.

- Set its Source to Embed.

- Add an OnMouseDown event and use Destroy to remove the object.
  - In the script, use OnMouseDown to detect the mouse click, then use Destroy() to make the object disappear. OnMouseDown only fires if the object has a Collider.

- Link the nodes together.

In today's class, we explored how to add videos to Unity. We learned how to make a video appear and play when a user scans an image, with the ability to play and pause it. We also learned about Script Machines and how to use them. In class, we used them to create a 3-click action: after the third click, the object is destroyed and an explosion effect plays.
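At its core, the 3-click action is a counter compared against a threshold. The plain-Python sketch below models that flow. It is not Visual Scripting or Unity API. `ClickableObject`, `clicks_to_destroy` and the `on_destroyed` callback are hypothetical names, and the callback stands in for spawning the explosion effect.

```python
from typing import Callable, Optional


class ClickableObject:
    """Models an object that is destroyed after a set number of clicks."""

    def __init__(self, clicks_to_destroy: int = 3,
                 on_destroyed: Optional[Callable[[], None]] = None) -> None:
        self.clicks_to_destroy = clicks_to_destroy
        self.on_destroyed = on_destroyed
        self.clicks = 0
        self.destroyed = False

    def on_mouse_down(self) -> None:
        """Counterpart of OnMouseDown: count the click, destroy on the last one."""
        if self.destroyed:
            return  # a destroyed object no longer reacts to clicks
        self.clicks += 1
        if self.clicks >= self.clicks_to_destroy:
            self.destroyed = True
            if self.on_destroyed is not None:
                self.on_destroyed()  # e.g. play the explosion effect


events: list[str] = []
box = ClickableObject(on_destroyed=lambda: events.append("explosion"))
for _ in range(3):
    box.on_mouse_down()
print(box.destroyed, events)  # True ['explosion']
```

The threshold of 3 comes from the class exercise. Making it a parameter is just one way to reuse the same logic for other click counts.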

We also learned how to import assets from the Unity Asset Store into our project. Overall, I learned many new things today, especially different ways of working with buttons and adding various effects in Unity.

Week 06 (28/05/25)

Transitioning Between Scenes

1. Open a new scene.

2. Go to the Build Settings.

3. Reorganize the scene sequence:
   - If the scene is not listed in the Build Settings, drag it into the list.

4. Create a Canvas:
   - Inside the Canvas, create an Image.
   - Set the Image to fill the whole screen.
   - Add a Button to the Canvas.

5. Write a script for the button action.

6. Assign the Button's OnClick event:
   - Select the Button in the Hierarchy.
   - In the Inspector under OnClick, click the + button to add a new event.
   - Drag the Canvas (or the relevant GameObject that holds the script) into the object field.
   - Choose the script from the dropdown.
   - Then select the function you created (GotoARScene). The function must be public to appear in this list.

7. Test the button to see if it works as expected.
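Unity can only load scenes that are listed in Build Settings. Each listed scene gets a build index from its position in that list, and index 0 is the scene the app opens first. The plain-Python sketch below models those rules. `SceneList`, `load_scene`, `goto_ar_scene` and the scene names are hypothetical. In Unity itself, the button function would call `SceneManager.LoadScene` from `UnityEngine.SceneManagement`.

```python
class SceneList:
    """Hypothetical model of the Build Settings 'Scenes In Build' list."""

    def __init__(self, scenes: list[str]) -> None:
        self.scenes = list(scenes)      # position in the list = build index
        self.current = self.scenes[0]   # index 0 is the first scene to open

    def load_scene(self, name: str) -> None:
        """Switch scenes, refusing any scene that was never added to the list."""
        if name not in self.scenes:
            raise ValueError(f"Scene '{name}' is not in Build Settings")
        self.current = name

    def build_index(self, name: str) -> int:
        return self.scenes.index(name)


build = SceneList(["MenuScene", "ARScene"])


def goto_ar_scene() -> None:
    """Stand-in for the GotoARScene function wired to the button's OnClick."""
    build.load_scene("ARScene")


goto_ar_scene()
print(build.current, build.build_index("ARScene"))  # ARScene 1
```

The error case mirrors step 3. A scene left out of the list cannot be reached, which is why it must be dragged into Build Settings first.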

Exercise for This Week:

Create a panel that will appear when a cube is clicked. Also, link all scenes together.

Expected Outcome:

- When the cube is clicked, a panel appears on the screen.

- The panel may contain buttons or options.

- Each button should link to a different scene.

- All scenes are connected and navigable through the UI.
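The exercise combines two pieces: a panel that starts hidden and is shown by a click, and panel buttons that each map to a scene. The plain-Python sketch below models that flow. `NavigationUI`, the button labels and the scene names are hypothetical. In Unity, the show step would typically be `SetActive(true)` on the panel GameObject. The sketch also assumes the panel closes once a new scene is chosen.

```python
class NavigationUI:
    """Models the exercise: clicking the cube reveals a panel of scene buttons."""

    def __init__(self, button_targets: dict[str, str]) -> None:
        self.panel_visible = False             # panel starts hidden
        self.button_targets = button_targets   # button label -> scene name
        self.current_scene = "MainScene"

    def click_cube(self) -> None:
        self.panel_visible = True              # like SetActive(true) on the panel

    def click_button(self, label: str) -> None:
        if not self.panel_visible:
            return  # buttons on a hidden panel cannot be clicked
        self.current_scene = self.button_targets[label]
        self.panel_visible = False             # assumed: panel closes on navigation


ui = NavigationUI({"Info": "InfoScene", "AR": "ARScene"})
ui.click_button("AR")    # ignored, because the panel is still hidden
ui.click_cube()
ui.click_button("AR")
print(ui.current_scene)  # ARScene
```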

This week, we learned several methods of linking scenes together using scripts. One method involves writing the code directly in the script, while another allows us to link scenes through the Inspector in Unity. Additionally, we were taught how to create and display a panel, which is very useful for our final project. The ability to show or hide UI elements like panels based on user interaction—such as clicking on a cube—adds interactivity and improves the user experience in our application.

Week 07 (04/06/25)

Connecting Unity to an Android Phone

- Open the phone’s Settings and go to About phone to find “Build number” (the exact location varies by manufacturer).

- Tap “Build number” seven times to enable Developer options.

- Go to Developer options and turn on “USB debugging.”

- Connect the phone to the computer with a USB cable and allow USB debugging when the phone asks.

- Go back to Unity and edit the “Player Settings” under “Build Settings.”

- When everything is set, select “Build and Run.”

- Then start the game in Unity (it will connect to your phone so you can experience the AR on the phone).

Week 08 (11/06/25)

- Learned how to scan the ground: once the ground is detected, tapping on it makes an object appear.

- Scanning to get a 3D model, and tapping to enter a real-world-scale interior (the lecturer provided a video link for this; it was not done in class).
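To place an object by tapping on the detected ground, you cast a ray from the camera through the tapped point and find where it meets the ground plane. Vuforia's Ground Plane feature handles this internally. The plain-Python sketch below only shows the underlying geometry. It assumes a horizontal plane at a known height `ground_y` and a ray given as an origin plus a direction. `hit_ground` and the example values are hypothetical.

```python
from typing import Optional

Vec3 = tuple[float, float, float]


def hit_ground(origin: Vec3, direction: Vec3, ground_y: float = 0.0) -> Optional[Vec3]:
    """Return where a ray meets the horizontal plane y = ground_y, or None."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dy) < 1e-9:
        return None  # ray runs parallel to the ground, so it never hits it
    t = (ground_y - oy) / dy  # how far along the ray the plane is
    if t < 0:
        return None  # the plane is behind the camera
    return (ox + t * dx, ground_y, oz + t * dz)


# Phone held 1.5 m above the floor, pointing forward and down at 45 degrees.
print(hit_ground((0.0, 1.5, 0.0), (0.0, -1.0, 1.0)))  # (0.0, 0.0, 1.5)
```

The returned point is where the object would be anchored. If the result is `None`, the tap did not land on the ground, so nothing should be placed.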

Class YouTube Link - Class Recording

Class Outcome (Video):

This week, I didn’t really know what was happening because nothing seemed to work for me. My Unity project kept showing unknown errors and bugs, even though my scene was empty. I felt very lost this week. After I tried different things, the lecturer suggested it might be a problem with Vuforia and recommended that I download an older version and test again.

Week 09 (18/06/25)

How to import a 3D-modeled environment into Unity and make it life-sized.
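Making an imported environment life-sized comes down to one uniform scale factor. Unity treats one unit as one metre, so the target height in units equals the real height in metres. Dividing that by the model's current height gives the scale to apply. The plain-Python sketch below shows the arithmetic. `life_size_scale` and the example sizes are hypothetical. In Unity, the model's current height could be read from its Renderer's `bounds`.

```python
def life_size_scale(model_height_units: float, real_height_m: float) -> float:
    """Uniform scale that makes a model's height match its real-world height.

    Assumes 1 Unity unit = 1 metre, so the target height in units
    equals the real height in metres.
    """
    if model_height_units <= 0:
        raise ValueError("model height must be positive")
    return real_height_m / model_height_units


# A room imported 1.5 units tall that should be 3 m tall in real life.
print(life_size_scale(1.5, 3.0))  # 2.0
```

Apply the same factor to all three axes so that the proportions of the environment stay correct.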

Week 10 (25/06/25)

Based on last week's file, create a UI button for the building and embed a video that can play/pause. Also, when the wall is clicked, the video will appear.
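The task has two states to track: whether the video has been revealed by clicking the wall, and whether it is playing. The plain-Python sketch below models that logic. `WallVideo` and its methods are hypothetical. In Unity, the toggle would call the VideoPlayer's `Play()` and `Pause()` methods. The sketch assumes the video starts playing as soon as it appears.

```python
class WallVideo:
    """Models the task: clicking the wall reveals a video; a button toggles play/pause."""

    def __init__(self) -> None:
        self.video_visible = False
        self.playing = False

    def click_wall(self) -> None:
        self.video_visible = True
        self.playing = True  # assumption: the video starts when it appears

    def click_play_pause(self) -> str:
        """Toggle playback and return the label the button should show next."""
        if self.video_visible:
            self.playing = not self.playing
        return "Pause" if self.playing else "Play"


wall = WallVideo()
print(wall.click_play_pause())  # Play (video not revealed yet, nothing changes)
wall.click_wall()
print(wall.click_play_pause())  # Play (the video was playing and is now paused)
```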
